
Measuring success through digital innovation: how to gather good data to evaluate your training program and track the impact of technological enhancements

Written by Briony Frost, Learning & Development Specialist and Dominika Bijos, Senior Learning Designer on Thursday, August 5, 2021

Evaluation is crucial and the payoffs are high: solid data encourages stakeholders to invest in future training and helps ensure that training meets everyone’s needs and expectations. Yet it is often overlooked and undervalued, dismissed as qualitative, subjective, and difficult to get people to engage with, and too little time and resource go into its planning. In this final article of our series, we look at the Evaluation phase of the instructional design systems process, ADDIE, and how to digitally innovate for maximum payoff from your training program. We will discuss how to plan and execute meaningful training evaluation, including what and when to measure, and most importantly, what we can learn from it.

Measure the impact of training so everyone knows it’s worth it

Whether you organize, facilitate, or participate in training, you need to know that it will be worth the investment of time, money, and energy:

- As a learner, you want to see evidence that your commitment is being rewarded and that you can do your job better than before, to maintain your quality of services and progress in your profession, to support your healthcare practitioner colleagues effectively, and through them, improve standards of care and experience for patients

- As a facilitator, you want to know that your training programs are having the desired effect, that your learners can meet the intended outcomes that will enable them to develop and enhance their knowledge, skills, and confidence, and that you’ve provided a positive and productive learning experience

- As a person responsible for instigating training for your staff, you want to know that you are maximizing the benefit of that training for your team, that the budget and time spent on getting the program ready have literally paid off, and that you can report back to investors and learners with measurable progress against learner key performance indicators, company critical success factors and strategic priorities, and hopefully secure funds to meet future training needs

The big question is, how can this be achieved? To create evaluation that answers the questions stakeholders might have, we need to know what we need to measure to show effectiveness and impact, when we need to take those measures, and how we measure them. Let’s tackle those points in order.

What do we measure when evaluating training?

To measure whether the learning experiences were meaningful, evaluation needs to span the entire training program to gather several types of information that fall under five key measures (stages), best summarized using the Kirkpatrick/Phillips model of evaluation:

1) Return on expectation (RoE) – has training met the stakeholders’ expectations?

2) Participant reaction – have suitable conditions been created for learning to take place?

3) Measure of learning – did learning actually take place?

4) Job impact – has on-the-job performance been improved by the learning?

5) Return on investment (RoI) – has the upskilling of staff, improved job performance, strategic priorities, etc., justified the costs of investment in the training?
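As a rough illustration of the fifth measure, RoI is commonly expressed as net program benefits as a percentage of program costs. The sketch below assumes the benefits of training have already been converted to a monetary value, which is often the hardest part in practice; the function name and all figures are hypothetical.

```python
def roi_percent(monetary_benefits, program_costs):
    """Return net benefits as a percentage of program costs."""
    if program_costs <= 0:
        raise ValueError("program_costs must be positive")
    net_benefits = monetary_benefits - program_costs
    return net_benefits / program_costs * 100


# Hypothetical figures, for illustration only: benefits attributed to the
# training versus the full cost of designing and delivering it.
example_roi = roi_percent(monetary_benefits=150_000, program_costs=100_000)
print(f"RoI: {example_roi:.0f}%")
```

A result of 0% means the program broke even; anything below that means the costs outweighed the measured benefits.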

Assessments and evaluations combined are a true measure of the program

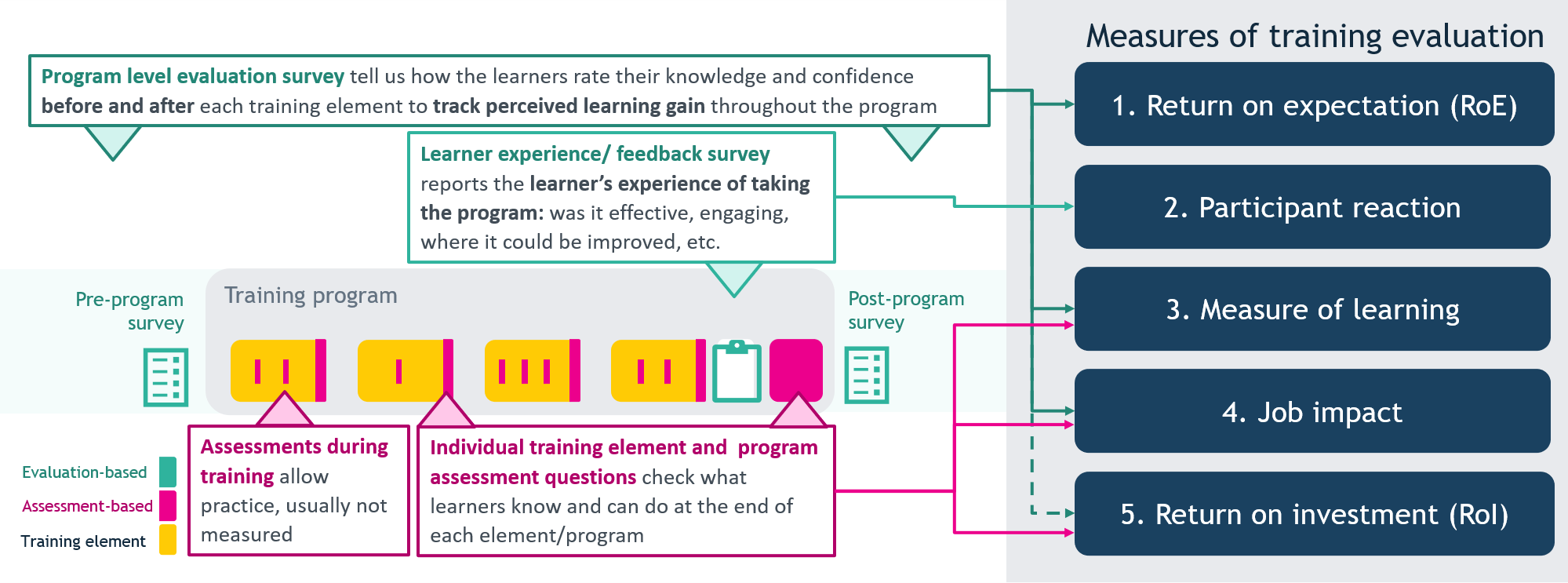

We can gather data for any of the five measures using either assessments, such as knowledge check, multiple-choice, or scenario-based tests, or evaluations, such as seeking feedback on the quality and impact of the program. Designing evaluations and assessments to answer the questions behind the five key measures and positioning them at different stages of the training program is a way to gather meaningful information. Let’s consider how this can look in practice (Figure 1).

Let’s say we start with a program-level evaluation survey. It looks at return on expectation (RoE), measures learning and job impact, and contributes information we can use to see return on investment (RoI). In this survey, learners are asked to rate both their knowledge and confidence before and after each module (or program) to track perceived learning gain throughout the program.
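To make perceived learning gain concrete, here is a minimal sketch of how before-and-after self-ratings might be turned into a per-learner and average gain. It assumes a 1 to 5 rating scale; the learner labels, field names, and numbers are invented for illustration and do not come from a real program.

```python
from statistics import mean

# Hypothetical survey responses: self-rated knowledge on a 1-5 scale,
# collected before and after a module.
responses = [
    {"learner": "A", "before": 2, "after": 4},
    {"learner": "B", "before": 3, "after": 4},
    {"learner": "C", "before": 1, "after": 3},
    {"learner": "D", "before": 4, "after": 4},
]


def perceived_gain(rows):
    """Return each learner's change in self-rating (after minus before)."""
    return {row["learner"]: row["after"] - row["before"] for row in rows}


gains = perceived_gain(responses)
average_gain = mean(list(gains.values()))

print(gains)
print(f"Average perceived gain: {average_gain:.2f} points")
```

The same calculation can be run separately for knowledge and confidence ratings, and per module, to see where learners feel they progressed most.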

Next, we might include a learner feedback survey, which specifically targets learners’ reactions to the program. It is a separate set of questions and asks learners to reflect on their experience of participating in the program, such as whether they thought it was effective, engaging, enjoyable, too short/long, or too detailed, and where it could be improved.

Finally, module and program assessment questions are a direct measure of learning and contribute to our understanding of the job impact and RoI. The same assessment can be repeated after a specified period of time to see how much knowledge has been retained. The results can be checked against what learners thought they could do when answering questions from a pre-program evaluation survey to see if they self-evaluated accurately and whether they are now able to meet the standard of learning expected of them.

Assessment questions are usually incorporated into training because compulsory training within pharma requires a ‘pass’ level. Often, however, the opportunity to combine assessment with training evaluation is missed, and with it the insights into mismatched expectations from any of the stakeholders, or misalignments with the original needs of the learners.

Choose key measures, but listen to your learners

A comprehensive evaluation of training effectiveness involves appraising impact at all five stages. However, it is equally possible to select the measures that are most relevant to your organization’s priorities and interests. There are a variety of other training evaluation models available too, and it is possible to combine approaches to create a bespoke picture that is best suited to your organization’s needs.

A word of caution is worth sharing here: although it is often tempting to skip participants’ reactions, as they do not necessarily correlate with job impact, enabling your learners to feed back on their experience not only provides valuable information to help you improve future training offerings, but also makes learners feel that their voices and engagement in the training matter, which can help maintain motivation for ongoing professional development.

Furthermore, to sustain engagement in the post-COVID hybrid learning reality, we need to remember that learners are human and need to feel like they belong, and their company cares about them. Now it is crucial to remove any technical or practical issues that arise in the first few iterations of hybrid events to set good standards for how these events look. For example, remote workers fear that they will be disadvantaged by not attending in person. Learner participation in future training is likely to improve if you consider their feedback and respond by making changes to enhance the experience – few people wish to sit through tedious or unpleasant training experiences face-to-face or virtually, whether or not it ultimately improves their performance!

When? Plan evaluation from the start of your training program

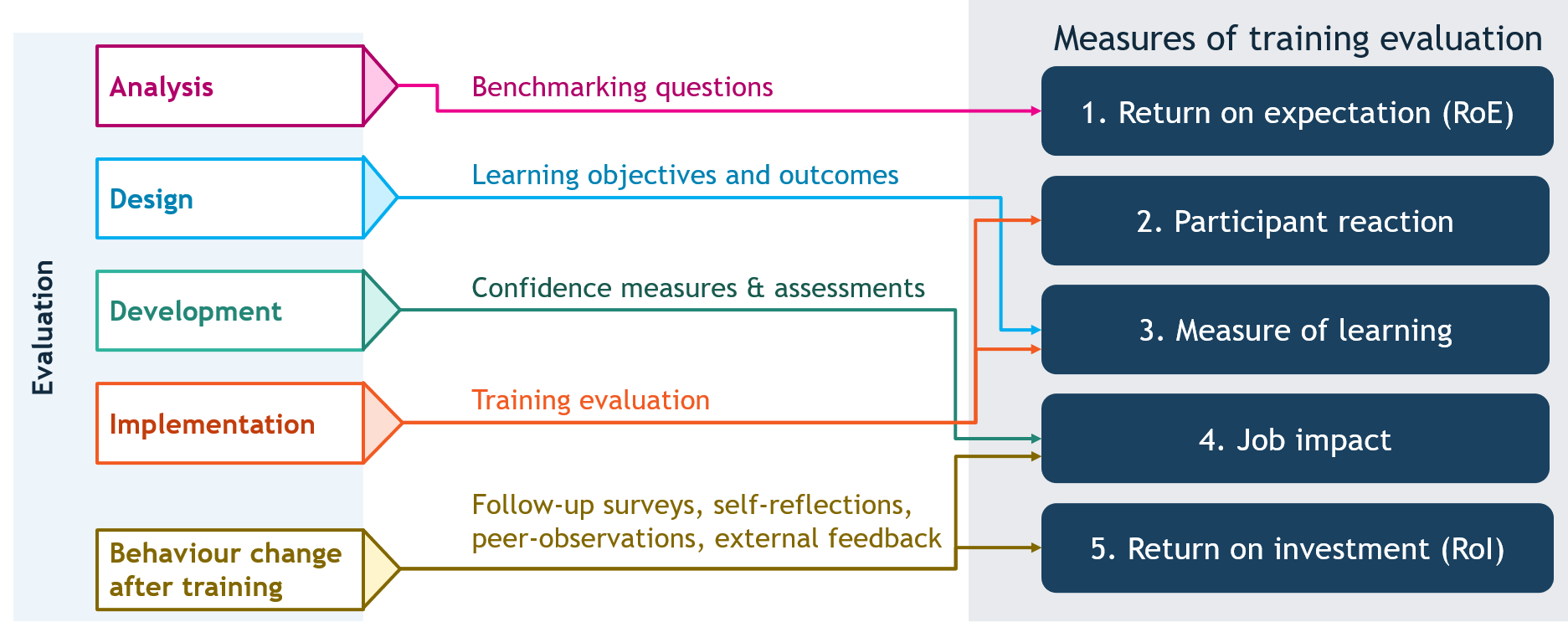

Although Evaluation is the last step in the ADDIE framework, it is something we plan for during the analysis and design phases, continue throughout the development and implementation phases, and extend past implementation and completion of training. This integration of evaluation throughout the entire training program allows us to gather several types of information that fall under the five key measures we’ve already discussed.

Firstly, during the needs analysis, start planning for evaluation of RoE. Create quantitative benchmarking questions that your learners answer upfront and then respond to again at the end of the training, to measure distance travelled from initial levels of knowledge, skills, and confidence. Including qualitative answers here will give a more comprehensive picture of learners’ progress and experience.

Secondly, during the design stage, construct clear, shared, and measurable learning outcomes from your objectives, which can be tested through specific knowledge and skills assessment points during and at the end of the program.

During development of the materials, include confidence measures throughout the training to keep track of increases and declines. Confidence can be aligned to the results of graded assessments to see whether confidence and learning gain rise or fall together, to identify areas for further training development or revisions to the program itself. These activities will help you to assess whether you have met learner expectations and whether learning gain has been achieved.
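One simple way to check whether confidence and learning gain rise or fall together is a correlation coefficient. The sketch below is an illustration under stated assumptions, not a prescribed method: it computes Pearson's r by hand, and the confidence ratings (1 to 5) and assessment scores (percent) are made up. With the small cohorts typical of training programs, treat the result as a prompt for further investigation rather than proof.

```python
from math import sqrt


def pearson(xs, ys):
    """Return Pearson's correlation coefficient for two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when all values are identical")
    return covariance / (spread_x * spread_y)


# Hypothetical data: one entry per learner, in the same order in both lists.
confidence_ratings = [2, 3, 3, 4, 5]
assessment_scores = [55, 60, 70, 80, 85]

r = pearson(confidence_ratings, assessment_scores)
print(f"Correlation between confidence and score: {r:.2f}")
```

A value near 1 suggests confidence and assessed learning move together; a value near 0 or below suggests learners may be over- or under-confident, which can point to content that needs revisiting.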

Finally, job impact and RoI are methods of measuring training impact that extend beyond the end point of the training program. Job impact is reflected in measuring behavioral change, which can take place through activities, such as:

- Follow-up surveys to reassess the original measures of learning gain and expectations

- Self-reflections as part of professional development activities

- Workplace and peer observations of job performance, with the relevant training program considered

- Reports and insights gathered from external colleagues, such as HCPs

How do we measure? Tracking the impact requires design and tools

To be able to use the data to gain insights and present them to relevant stakeholders, there are a few elements that can help you:

- Survey question design: the key to obtaining usable data lies not only in the ultimate breakdown, but in the timing of data gathering and the data that is sought in the first place, so get a survey specialist on board if you can! It’s also worth using a good quality survey platform, as these will often provide basic data analysis for you, let you link questions so that learners can build on previous answers, skip questions that are not applicable, and use a range of question types, which standard Word templates rarely support

- Assessments that build on complexity: assessments should be built with the idea that learners can encounter, practice, and master different types of assessment questions throughout their learning journey. Question difficulty will likely escalate within a training element, or where content crossover between elements allows. Thus, starting with the last element and working back, or starting with the first element and working forward, is the most effective and easiest way to do it. Using an assessment app can support learning gain data gathering as a discrete evaluation element, and synchronizing it with a learning dashboard helps support effective data presentation throughout training

- Data type: combining quantitative and qualitative data provides a broader and deeper analysis of your program’s impact, enabling you to feed back more effectively to your stakeholders in response to their specific interests with regards to the five possible areas of evaluation. Invest in digital tools that allow for mixed methods data analysis to get the fullest picture of your program’s success

- Levels of information: audience is crucial here, so think about who you will need to feed back to and what they will want to know, as the data gathered can often be cut and presented a number of different ways to give your stakeholders the insights that are most valuable to them. Work with your learning provider and digital teams to create clear, succinct, aesthetically pleasing data summaries to improve engagement and understanding

- Data gathering analytics: learning providers will often have digital tools and specialists that they can draw upon to help you gather, process, and display the information to different audiences. Learning management systems and survey platforms may also have built-in analytics that you can use to speed up the process. Additional information, like time to completion of an element or speed with which learners answer questions, might give you important clues about chunking of content or complexity of the assessment

- Data presentation: your organization and stakeholders might have a preferred way to receive information. For your learners, it may be motivational to include a good learning dashboard that enables them to track their progress and gains against the original learning outcomes, ideally situated within the context of their wider learning journey and their professional development goals

The “so what?” of your evaluation effort

All this is designed to ultimately give you the answer to whether the training is worth the investment of time, money, and energy.

- As a learner, seeing that your feedback is valued, considered, and implemented, as well as seeing a dashboard mapping your learning journey, is a great incentive to stay onboard and engaged with training, while future learners will likely benefit from an improved experience as a result of changes you’ve made to eliminate the teething problems, especially in the new hybrid environment

- As a facilitator, seeing feedback that shows learners appreciate the training and improve through it, and that what you are doing has the intended outcomes, enhances your confidence in delivering a quality learning experience. Good evaluation will also provide insight into why training might miss the mark, and thus point to specific areas that need to be restructured or improved. Learners’ feedback might also point to future training opportunities

- As a person responsible for instigating training for your staff, working with RoE and RoI will make future decisions on budget and time data driven, and your reports will include measurable progress. It can be valuable to track points of particular investment in digital tools and platforms as we step forward into a future of hybrid working to see which ones provide the biggest payoffs for your company and for your learners, to help streamline future training investments

- As an educational measure, a well-constructed evaluation might also be a requirement of professional bodies to accredit training, or it might be used as a research tool to be able to publish data, as there will be a rigorous and documented mechanism of training evaluation

Evaluation done right can show you and your stakeholders that the training effort has, or has not, paid off, all supported by data. Before you plan your next training endeavor, consider investing in evaluation. It’s worth it.

OPEN Health’s L&D team brings together a wealth of diverse staff with unique skill sets, healthcare training, and communications experience. We take pride in providing good quality measures of training impact and effectiveness that take into account all your stakeholders’ needs. If you would like to hear more about how we can help you track the RoE, RoI, and more of your training program, please get in touch.
